This template is cloned to act as the base image for your Kubernetes cluster. Then simply follow the on-screen steps. The goal is to have a T-0 Gateway with external connectivity that Kubernetes can use.

You can just install ESXi and vCenter without a license to activate a fully featured 60-day evaluation. For physical setups, you should have 3 hosts with at least 6 cores and 64 GB of memory. To complete the install, add the Docker apt repository. At this stage, you may notice that the coredns pods remain in the Pending state with a FailedScheduling status. Once the vSphere Cloud Provider Interface (CPI) is installed and the nodes are initialized, the taints are automatically removed from the nodes, which allows the coredns pods to be scheduled. The discovery.yaml file needs to be copied to /etc/kubernetes/discovery.yaml on each of the worker nodes.
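
The scheduling behaviour described above can be illustrated with a small model of taint and toleration matching. This is a minimal sketch, not the scheduler's real code: the `Taint` and `Toleration` classes, the `schedulable` helper and the example coredns toleration are assumptions made for illustration, and the taint value and effect (`true`, `NoSchedule`) are assumed as well.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Taint:
    key: str
    effect: str
    value: str = ""


@dataclass(frozen=True)
class Toleration:
    key: Optional[str] = None     # None matches any key (only valid with "Exists")
    operator: str = "Equal"       # "Equal" or "Exists"
    value: str = ""
    effect: Optional[str] = None  # None matches any effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Return True if a single toleration matches a single taint."""
    if tol.effect is not None and tol.effect != taint.effect:
        return False
    if tol.key is None:
        return tol.operator == "Exists"
    if tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True
    return tol.operator == "Equal" and tol.value == taint.value


def schedulable(taints: List[Taint], tolerations: List[Toleration]) -> bool:
    """A pod can be scheduled only if every blocking taint is tolerated."""
    blocking = [t for t in taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in blocking)


# Taint set on a node when the kubelet starts with an external cloud provider.
# The value and effect are assumptions for this sketch.
UNINITIALIZED = Taint(
    key="node.cloudprovider.kubernetes.io/uninitialized",
    effect="NoSchedule",
    value="true",
)

# Illustrative toleration only; the real coredns manifest may differ.
COREDNS_TOLERATIONS = [Toleration(key="CriticalAddonsOnly", operator="Exists")]

print(schedulable([UNINITIALIZED], COREDNS_TOLERATIONS))  # before the CPI initializes the node
print(schedulable([], COREDNS_TOLERATIONS))               # after the taint is removed
```

In this model the first call reports that the pod cannot be placed while the taint is present, and the second reports that it can once the taint is gone.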

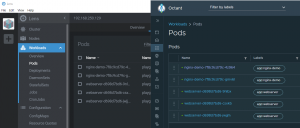

Here is the tutorial on deploying Kubernetes with kubeadm using the VCP: Deploying Kubernetes using kubeadm with the vSphere Cloud Provider (in-tree). Either use separate transport VLANs (requires external routing!) or separate network adapters. To retrieve all Node VMs, use the following command: To use govc to enable Disk UUID, use the following command: Further information on disk.enableUUID can be found in VMware Knowledgebase Article 52815. Make sure to set the Automation Level to "Fully Automated". If you're configuring a new network, ensure that nodes deployed to it receive an IP address via DHCP and can connect to the internet. To set up the MongoDB replica set configuration, we need to connect to one of the mongod container processes. API Server endpoint FQDN: If you set a name, it will be added to the certificate used to access the Supervisor Cluster. vSphere with Kubernetes enables you to directly run containers on your ESXi cluster. There are a few more contained within the archive. This is surprisingly easy using the tkg command. The resulting persistent volume VMDK is placed on a compatible datastore with the maximum free space that satisfies the Space-Efficient storage policy requirements. Some components must be installed on all of the nodes. Please note that the CSI driver requires the presence of a ProviderID label on each node in the K8s cluster. Verify that the MongoDB application has been deployed. I saw you used the Ingress CIDRs 192.168.250.128/27 for the workload network. ESXi Hosts connected to a vSphere Distributed Switch Version 7. This is because the master node has taints that the coredns pods cannot tolerate. Configure the Management Network. As long as the ProviderID is populated by some means, the vSphere CSI driver will work.
When the Edge VM is too small, Load Balancer Services will fail to deploy. It's good practice to have them ready before you start. This is expected, as we have started kubelet with cloud-provider: external. The only supported option to enable vSphere with Kubernetes is to have a VMware Cloud Foundation (VCF) 4.0 license. Note that TCP/IP ingress isn't supported. Right-click on the imported VM photon-3-kube-v1.19.1+vmware.2a, select the Template menu item and choose Convert to template. This message states that you do not have an ESXi license with the "Namespace Management" feature.

Finally, the master node configuration needs to be exported, as it is used by the worker nodes that join the master. I saw this segment network is on the Management Network too. After your application gets deployed, its state is backed by the VMDK file associated with the specified storage policy. The next step to carry out on the master node is to install the flannel pod overlay network so that the pods can communicate with each other. kubeadm is the command to bootstrap the cluster. When the kubelet is started with an external cloud provider, this taint is set on a node to mark it as unusable. These addresses are used for the Control Plane: 3x Worker, 1x VIP, and an additional IP for rolling updates. T-0 Gateway with external interface in the management VLAN (with internet connectivity). However, to use placement controls, the required configuration steps need to be put in place at Kubernetes deployment time, and they require additional settings in the vSphere.conf of both the CPI and CSI. I have a question. I'd recommend applying the following extensions. Over the last year we've done a number of Zercurity deployments onto Kubernetes. VM Hardware should be at version 15 or higher. If you're using macOS, you can use the same command below; just substitute darwin for linux. This article explains how to get your cluster enabled for the so-called "Workload Management". Grab the cluster credentials with: Using the command above, copy and paste it into our kubectl command to set your new context. The first step is to connect to your vSphere vCenter instance with your administrator credentials. The purpose of this guide is to provide the reader with step-by-step instructions on how to deploy Kubernetes on vSphere infrastructure. Providing the K8s master node(s) access to the vCenter management interface will be sufficient, given the CPI and CSI pods are deployed on the master node(s).
However, I'd argue these are the primary extensions you're going to want to add. This is the last stage, I promise. The virtual IP address is the main IP address of the API server that provides the load balancing service, aka the ingress server. You may now remove the vsphere.conf file created at /etc/kubernetes/. Once the OVA has been imported, it's deployed as a VM.
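
The Control Plane address count mentioned above (three nodes, one VIP and one spare for rolling updates, so five in total) can be checked against the Ingress CIDR with Python's standard `ipaddress` module. This is only a sketch: the starting address `192.168.250.10` is a hypothetical value, and treating the five addresses as one consecutive block is an assumption made for the example.

```python
import ipaddress


def control_plane_addresses(start: str, count: int = 5):
    """Return `count` consecutive addresses beginning at `start`.

    Five covers 3 nodes + 1 VIP + 1 spare for rolling updates.
    """
    first = ipaddress.ip_address(start)
    return [first + offset for offset in range(count)]


def overlaps(addresses, cidr: str) -> bool:
    """Return True if any of the addresses falls inside the given CIDR."""
    network = ipaddress.ip_network(cidr)
    return any(addr in network for addr in addresses)


INGRESS_CIDR = "192.168.250.128/27"  # Ingress CIDR quoted earlier in the text
START = "192.168.250.10"             # hypothetical first Control Plane address

addrs = control_plane_addresses(START)
print([str(a) for a in addrs])
print(ipaddress.ip_network(INGRESS_CIDR).num_addresses)  # size of the /27 range
print(overlaps(addrs, INGRESS_CIDR))
```

A check like this is handy before filling in the wizard, because a Control Plane range that runs into the ingress range is easy to miss by eye.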

Finally, review your configuration and click Deploy management cluster. In the vSphere Client, navigate to Developer Center > API Explorer and search for namespace. You don't need the add-on license when running in evaluation mode. If you have followed the previous guidance on how to create the OS template image, this step will have already been implemented. In each case, where the components are installed is highlighted.

I tried to use a private segment but I can't reach Tanzu from VCD. We will return to that step shortly. I'm not going deep into the configuration as there are various options to get the overlay up and running. On the Review and finish page, review the policy settings, and click Finish. If you're running the latest release of vCenter (7.0.1.00100), you can actually deploy a TKG cluster straight from the Workload Management screen. If you have multiple vCenter Server instances in your environment, create the VM storage policy on each instance. [y/N]: y. This can take around 8 to 10 minutes, and even longer depending on your internet connection. Don't you also need the vSphere Kubernetes add-on license? Note: If you happen to make an error with the vsphere.conf, simply delete the CPI components and the configMap, make any necessary edits to the configMap vSphere.conf file, and reapply the steps above. With the release of vSphere 7.0, the integration of Kubernetes, formerly known as Project Pacific, has been introduced. For the next stage you can provide some optional metadata or labels to make it easier to identify your VMs. At this point, you can check if the overlay network is deployed. This should be the default, but it is always good practice to check. You can switch to the root environment using the "sudo su" command. If the license is expired, you have to reinstall, reset the ESXi host, or reset the evaluation license. The following steps should be used to install the container runtime on all of the nodes. Feel free to activate the vCenter license.
Please visit https://vsphere-csi-driver.sigs.k8s.io/driver-deployment/installation.html to install the vSphere CSI Driver. The third option is to use VMware's 60-day evaluation licenses. In the following example, 1 host is not configured with NSX-T: It's not a big issue, but power consumption is very high for my lab (770W, normally they are at 550W). Verify that vsphere-cloud-controller-manager is running and all other system pods are up and running (note that the coredns pods were not running previously; they should be running now, as the taints have been removed by installing the CPI): Verify that the node.cloudprovider.kubernetes.io/uninitialized taint is removed from all nodes. The discovery.yaml file must exist in /etc/kubernetes on the nodes. Bootstrap the Kubernetes master node using the cluster configuration file created in the step above. This storage class maps to the Space-Efficient VM storage policy that you defined previously on the vSphere Client side. If you use a Linux or Windows environment to initiate the deployment, links to the tools are included. Navigate to vSphere Client > Hosts and Clusters > [Clustername] > Configure > Services > vSphere DRS > Edit and enable vSphere DRS. different DataCenters or different vCenters, using the concept of Zones and Regions. We will now create a StorageClass YAML file that describes storage requirements for the container and references the VM storage policy to be used. Then run tkg upgrade management-cluster with your management cluster id. Also, notice that the token used in the worker node config is the same as the one we put in the master kubeadminitmaster.yaml configuration above. You need to copy and paste the contents of your public key (the .pub file). Cluster domain-c1 has hosts that are not licensed for vSphere Namespaces. ESXi Hosts are in a Cluster with HA and DRS (fully automated) enabled. It does not matter if you are in bash or the default appliancesh.
Once installed, you can run tkg version to check that tkg is working and is on your system PATH. However, for the purposes of this post, and to support older versions of ESX (vSphere 6.7u3 and vSphere 7.0) and vCenter, we're going to be using the TKG client utility, which spins up its own simple-to-use web UI for deploying Kubernetes anyway. The next stage is to define the resource location. Follow the tool-specific instructions for installing the tools on the different operating systems. kubectl is the command line utility to communicate with your cluster. Navigate to vSphere Client > Hosts and Clusters > [Clustername] > Configure > Services > vSphere Availability > Edit and enable vSphere HA. You can rely on the host names to be the same, due to having employed the StatefulSet. The CPI supports storing vCenter credentials either in: In the example vsphere.conf above, there are two configured Kubernetes secrets. At this stage you're almost ready to go and you can start deploying non-persistent containers to test out the cluster. This PersistentVolumeClaim will be created within the default namespace using 1Gi of disk space. If you want to see what happens, log in to the vCenter Server using SSH and follow the wcpsvc.log: A problem I ran into during my first deployments was that my Edge VM was too small. Network: Choose a Portgroup or Distributed Portgroup. At first, you should verify that the DVS is version 7.0. Of course, this repo also needs to contain the Mongo image. The tasks use the following items: The virtual disk (VMDK) that will back your containerized application needs to meet specific storage requirements. The Service provides a networking endpoint for the application. You can use the kubectl describe sc thin command to get additional information on the state of the StorageClass. Choose the VMware vSphere deploy option. We will show how to copy the file from the master to the workers in the next step.
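
To make the StorageClass and PersistentVolumeClaim relationship described above more concrete, the sketch below builds both objects as Python dictionaries and prints them as JSON, which kubectl accepts alongside YAML. Several things here are assumptions rather than the original author's manifests: the provisioner `csi.vsphere.vmware.com` and the `storagepolicyname` parameter assume the vSphere CSI driver is in use, the claim name `example-claim` is hypothetical, and pairing the `thin` class name with the Space-Efficient policy is a guess based on the names that appear in the text.

```python
import json


def storage_class(name: str, policy_name: str) -> dict:
    """Build a StorageClass that references a vSphere VM storage policy.

    Assumes the vSphere CSI driver (provisioner and parameter names below).
    """
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "csi.vsphere.vmware.com",
        "parameters": {"storagepolicyname": policy_name},
    }


def persistent_volume_claim(name: str, storage_class_name: str,
                            size: str = "1Gi", namespace: str = "default") -> dict:
    """Build a PVC that requests `size` of storage from the given class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


def as_list(items) -> dict:
    """Wrap several objects in a v1 List so they can be applied together."""
    return {"apiVersion": "v1", "kind": "List", "items": list(items)}


sc = storage_class("thin", "Space-Efficient")         # class and policy names as assumed above
pvc = persistent_volume_claim("example-claim", "thin")  # hypothetical claim name

print(json.dumps(as_list([sc, pvc]), indent=2))
```

The printed JSON could be saved to a file and reviewed before applying it; the field values should be checked against the CSI driver version actually deployed.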

Notice it is using /etc/kubernetes/discovery.yaml as the input for master discovery. Do you know if this is the only way to route the Control Plane with VCD?

This can be in your management network to keep the setup simple. The following is the list of docker images that are required for the installation of CSI and CPI on Kubernetes. If not, you just see the following screen showing you that something is wrong, but not why. Next we need to define specifications for the containerized application in the StatefulSet YAML file. When I once tried to enable Workload Management in a platform where I migrated from N-VDS to VDS7 in a very harsh way, one host was also in an invalid state. The next series of steps will help configure the TKG deployment. In the vSphere Client, navigate to Workload Management and click ENABLE. We have set the number of replicas to 3, indicating that there will be 3 Pods, 3 PVCs and 3 PVs instantiated as part of this StatefulSet. However, we've created a separate distributed switch called VM Tanzu Prod, which is connected via its own segregated VLAN back into our network. We're using photon-3-v1.17.3_vmware.2.ova. After a controller from the cloud provider initializes this node, the kubelet removes this taint.
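
The effect of setting replicas to 3 in a StatefulSet can be sketched by listing the object names Kubernetes derives from it: pods are named `<statefulset>-<ordinal>` and claims are named `<claim template>-<pod>`. The names `mongod`, `mongodb-persistent-storage-claim` and `mongodb-service` below are hypothetical placeholders, and the `cluster.local` domain is the usual default rather than something stated in the text.

```python
def statefulset_object_names(statefulset: str, claim_template: str,
                             service: str, replicas: int,
                             namespace: str = "default",
                             cluster_domain: str = "cluster.local") -> dict:
    """List the pod, PVC and DNS names a StatefulSet produces.

    Ordinals start at 0 and stay stable across restarts, which is why
    host names can be relied on when configuring a replica set.
    """
    pods = [f"{statefulset}-{i}" for i in range(replicas)]
    pvcs = [f"{claim_template}-{pod}" for pod in pods]
    hosts = [f"{pod}.{service}.{namespace}.svc.{cluster_domain}" for pod in pods]
    return {"pods": pods, "pvcs": pvcs, "hosts": hosts}


# All names here are hypothetical placeholders for illustration.
names = statefulset_object_names(
    statefulset="mongod",
    claim_template="mongodb-persistent-storage-claim",
    service="mongodb-service",
    replicas=3,
)

for kind, values in names.items():
    print(kind, values)
```

Each claim is bound to one persistent volume, so three replicas lead to three pods, three PVCs and three PVs.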
